Photo by Natalie Muyres prompting ChatGPT, September 2024

Hey, ChatGPT, are you intelligent?

“My ‘intelligence’ is limited to the data and algorithms used to create me, which allows me to perform language-related tasks effectively. I can provide information, answer questions, and assist with various queries, but my understanding is fundamentally different from human intelligence.”

ChatGPT, July 17, 2024

The concept of intelligence is commonly understood as the ability to acquire knowledge and/or skills, and to learn and understand one’s environment or situation.1

According to ChatGPT, intelligence is also associated with problem solving, adapting, reasoning, and perceiving and interpreting sensory input. Intelligence gathering is used everywhere, including in personal decision-making, business strategy, and military operations. Intelligence is used to determine why situations have emerged the way they have and to decide how to address them, by whom, and when.

Simply put, intelligence is needed to identify the emergent threats and opportunities around us and to figure out what to do about them.

Extending our Horizons of Caring

Whose threats and opportunities are we talking about, though? Our own? Our family’s? Our organizations’? Our nations’? Humanity’s?

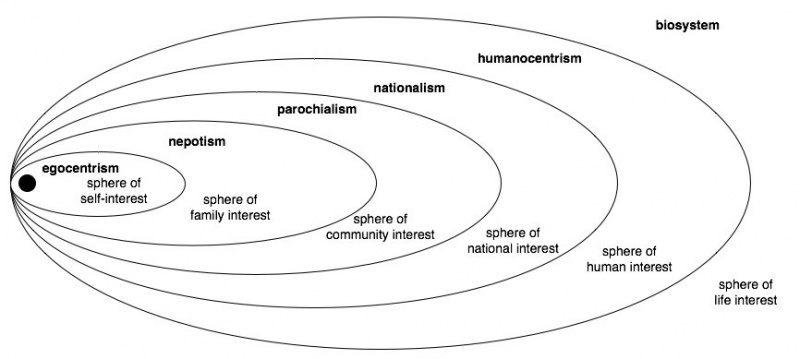

In Human Learning Ecology, we use a construct called “Biosocial Horizon Line-Boundaries of Caring” to help us identify and reflect on who and what we are willing to care about (see Figure 1). It spans from our own self-interest (on the left) to the sphere of life (on the right).

When we extend the boundaries of our caring toward the sphere of life, we also expand our intelligence gathering or situational understanding. This, in turn, makes us better able to identify and respond to a greater range of threats and opportunities.

Ideally, if we collectively recognize that we are embedded within life and natural systems, we will structure our thinking, caring, and acting in ways that do no harm to life or the future of life on the planet. Right? Well, it’s a big ask.

Deciding how to spend one’s free time on the weekend, for example, requires much less intelligence gathering, and is a much less complex decision than figuring out how to combat the climate crisis. The more complex the issue, the more inquiry, or situational understanding, is required.

Take the climate crisis for instance. When individuals, organizations, and nations extend their boundaries of caring, the corresponding requirements of intelligence and situational understanding do not end with what is good for us personally, but involve understanding what is most needed to protect all of life, and the generations to come, from global warming.

At the Human Venture Institute, we call this kind of intelligence gathering – where the boundaries of caring are extended to ensure all life flourishes, not just ourselves, our organizations, or our nations – systemic adaptive intelligence.

Systemic Adaptive Intelligence

What is “intelligence”? We define intelligence as all of the inquiry, knowledge creation, testing, storage, and communications capabilities, including language, literature, science, logic, media, etc., that humanity uses to understand threats and opportunities.

“Systemic adaptive intelligence” delineates intelligence capacities that understand, assess, and address opportunities and threats at the level of all life systems. The word systemic is used deliberately to distinguish between the intelligence needed to address opportunities and threats at the sphere of life from intelligence required at other biosocial horizon lines (see Figure 1).

Systemic adaptive intelligence seeks to understand the current situation, the history and past causal factors, and possible scenarios into the future. It strives to ensure that our knowledge and information closely match what is actually going on, and continually tests and questions facts and stories to confirm that they correspond with reality – essentially, that the map matches the terrain.

A fantastic example of humanity striving for systemic adaptive intelligence is the World Health Organization’s Hub for Pandemic and Epidemic Intelligence – an initiative created in 2021 to improve global surveillance and response to disease. Without understanding how, where, and why disease spreads, humanity is unable to respond in a timely and effective manner. We have also learned many lessons from the COVID-19 pandemic about the harm and suffering that can be caused when decision-making is based on false and misleading information.

Artificial Intelligence

So, what about artificial intelligence? How does it help humanity understand our greatest threats and opportunities and what is most needed for all life systems?

“Today our supposedly revolutionary advancements in artificial intelligence are indeed cause for both concern and optimism. Optimism because intelligence is the means by which we solve problems. Concern because we fear that the most popular and fashionable strain of A.I. – machine learning – will degrade our science and debase our ethics by incorporating into our technology a fundamentally flawed conception of language and knowledge.” (Chomsky et al. 2023)

In the first article in this series on AI, ChatGPT’s response to our question about the potential risks of AI was that a positive or negative outcome depended on AI regulation, source data, and whether AI technologies will be misused.

Therefore, AI’s ability to inform a systemic adaptive approach, meaning adaptive for life and humanity, is dependent on the source and use of data. “Thus at least for now, AI’s influence is not so much a function of either emulating or surpassing human intelligence, but shaping the way we think, learn, and make decisions,” wrote Tomas Chamorro-Premuzic, organizational psychologist (2023, 21).

Today and into the future, the intelligence we gather from AI is only as good, or adaptive, as the source data itself – and how AI learns from this data. If AI provides a flawed understanding of reality (or is designed to manipulate reality) and we don’t test or validate its output, the risk of making flawed decisions is high.

This isn’t a great concern if you’re using ChatGPT to find a suitable recipe for dinner, but it is of great concern if AI is being used to exploit individuals, disrupt democratic voting, or respond to a nuclear threat.2

The question we are asking at the Human Venture is, how will the proliferation of AI impede or accelerate our ability to meet humanity’s greatest needs?

The physicist Max Tegmark asked if we should give AI goals, and if so, whose goals do we give it? How are our goals determined? By whom? For whom? “These questions are not only difficult, but also crucial for the future of life: if we don’t know what we want, we’re less likely to get it, and if we cede control to machines that don’t share our goals, then we’re likely to get what we don’t want,” wrote Tegmark (2017, 249).

Consider an AI designed with the goal of manipulating the stock market for individual and/or organizational gain. The outcome could be a market crash and global economic disruption affecting entire nations around the world. Or consider the current concern about how much energy is required to power AI: what are the climate implications for life systems?

Determining our “goals” requires us to ask for whom: for what horizon of caring are we designing goals? Look at Figure 1 again. Goals are required at each boundary line. Because individuals, organizations, and nation states are nested within the boundaries of life and humanity, the goals we set at one level will impact those at other levels.

Designing AI for Humanity’s Needs

Since the design of artificial intelligence is dependent on our own interpretations of reality and deciding what is most needed (giving it goals), we must ask if we have equipped humans with the capacities to seek and understand reality as accurately as we can. If not, what safeguards are required to eliminate bias and counteract narrow horizons of caring that can cause harm? What incentives are required to examine our ignorance and expand our horizons of caring?

As AI researcher Blaise Agüera y Arcas said, “We’ve talked a lot about the problem of infusing human values into machines. I actually don’t think that that’s the main problem. I think that the problem is that human values as they stand don’t cut it. They’re not good enough” (Christian 2020, 94).

The question of whether AI will impede or accelerate our ability to meet humanity’s greatest needs thus depends on how we construct our caring and thinking based on what we value.

The coming wave of artificial intelligence is not the first technological transformation we’ve experienced. We can look to cellular communications, the internet, and as far back as the Gutenberg printing press to learn how the proliferation of technology impacted humanity, in positive and negative ways.

At the Human Venture Institute we draw on Human Learning Ecology to assess questions like this – to understand the adaptive possibilities of AI but also the risks, and how to navigate the coming wave of artificial intelligence. To achieve the most adaptive outcome from the proliferation of AI, our horizons of caring should consider all of life and thus a much wider set of possible paths and implications.

As AI entrepreneur Mustafa Suleyman said, “Step back and consider what’s happening on the scale of a decade or a century. We really are at a turning point in the history of humanity” (2023, 78).

Where do we go from here? How do we equip ourselves, our organizations, and societal systems to design AI solutions that do no harm to life systems, and in turn protect us from ourselves? One place to start is to think about how we conduct ourselves, how we construct our values, and who and what we care about.

To learn more about Human Learning Ecology and how the Human Venture Institute is working to equip humans and society for emergent opportunities and threats, visit our website, www.humanventure.com.

Resources Cited in this Article

- Chamorro-Premuzic, Tomas. 2023. “I, Human: AI, Automation, and the Quest to Reclaim What Makes Us Unique.” Harvard Business Review Press.

- Chomsky, Noam, Ian Roberts, and Jeffrey Watumull. 2023. “Noam Chomsky: The False Promise of ChatGPT.” The New York Times.

- Christian, Brian. 2020. “The Alignment Problem: Machine Learning and Human Values.” W. W. Norton & Company.

- Suleyman, Mustafa. 2023. “The Coming Wave: Technology, Power, and the Twenty-first Century’s Greatest Dilemma.” Crown.

- Tegmark, Max. 2017. “Life 3.0: Being Human in the Age of Artificial Intelligence.” Alfred A. Knopf.

- “The Human Venture & Pioneer Leadership Journey Reference Maps” by Action Studies Institute. Calgary, Alberta, 2019.

Natalie Muyres is a Human Venture Leadership Associate and current Co-chair of the Human Venture Institute. She is an independent applied organizational and design anthropologist, consultant, project manager, and organizational change management professional in Calgary, Alberta.

Human Venture Leadership is a non-profit learning organization, providing proven educational programs that enable and grow the leadership capacities of individuals, communities and our global society. The Human Venture Institute is a research hub whose primary function is to cement and extend Human Learning Ecology.

1. Merriam-Webster and Oxford English online dictionaries.

2. https://futureoflife.org