Photo by Nahrizul Kadri on Unsplash

This is the first in a series of articles on artificial intelligence.

In this article:

- Emerging AI

- A Framework for Learning about AI

- Learning from History

- Are we in an AI Revolution?

- Resources to Support Learning

- Resources Cited in this Article

Emerging AI

Are you ready for the AI (artificial intelligence) revolution? What does readiness mean? What is a revolution? What is responsible AI? At the Human Venture Institute we’ve been considering these questions and what a future transformed by generative AI looks like.

AI is not new. If you have used Apple’s Siri, Spotify, Amazon’s Alexa, or facial recognition at the US/Canada border, you have been engaging with artificial intelligence for quite some time. AI has been on a developmental path since the 1940s. What is emerging now is the proliferation of uses and applications for generative AI. Simply stated, generative AI is trained on curated datasets, drawn from either public domains or custom data sources, and uses them to generate content such as text and images. Generative AI recognizes patterns in the data, creates outputs in response to a prompt, and can learn from the data to refine its output.

AI is being used in a variety of ways: supporting research and writing, detecting disease, researching case law in the justice system, and streamlining oil well operations. The possibilities of AI seem endless and exciting, but they can also be concerning. In a February 2024 Leger Canada survey, more Canadians reported being concerned about AI tools than in 2023. Concerns include data privacy, job disruption, and becoming overly dependent on AI tools. These concerns are at least partly warranted, given recent news of deepfakes, such as fabricated explicit images of Taylor Swift and others, and of AI being used to disrupt elections in Canada and elsewhere.

Our individual and community values and beliefs, or our culture, can and will be altered by what we are exposed to, and AI is increasing the likelihood that what we see and hear is fake. So, how will this impact our decision-making capacities? Our personal relationships? Business decisions? Our actions as nations and the geopolitical environment?

A Framework for Learning about AI

For the last few months I’ve been asking: What are the opportunities and threats of generative AI for my work, for my community, and for humanity? How will the introduction of AI into our everyday lives change our sociocultural worlds? What learning should I be doing? What should I be paying attention to? How can Human Learning Ecology support my learning? How can I help others? This series of articles aims to explain how Human Learning Ecology can be used to understand the situation dynamics of AI developments, their possible consequences, and what responsible use of AI could or should look like.

People respond well when the negative impact of a situation is visible. Once a disaster strikes, people are quick to mobilize to repair the damage and help those who are suffering but not to mobilize to prevent it in the first place.

Don Norman, “Design for a Better World” (2023)

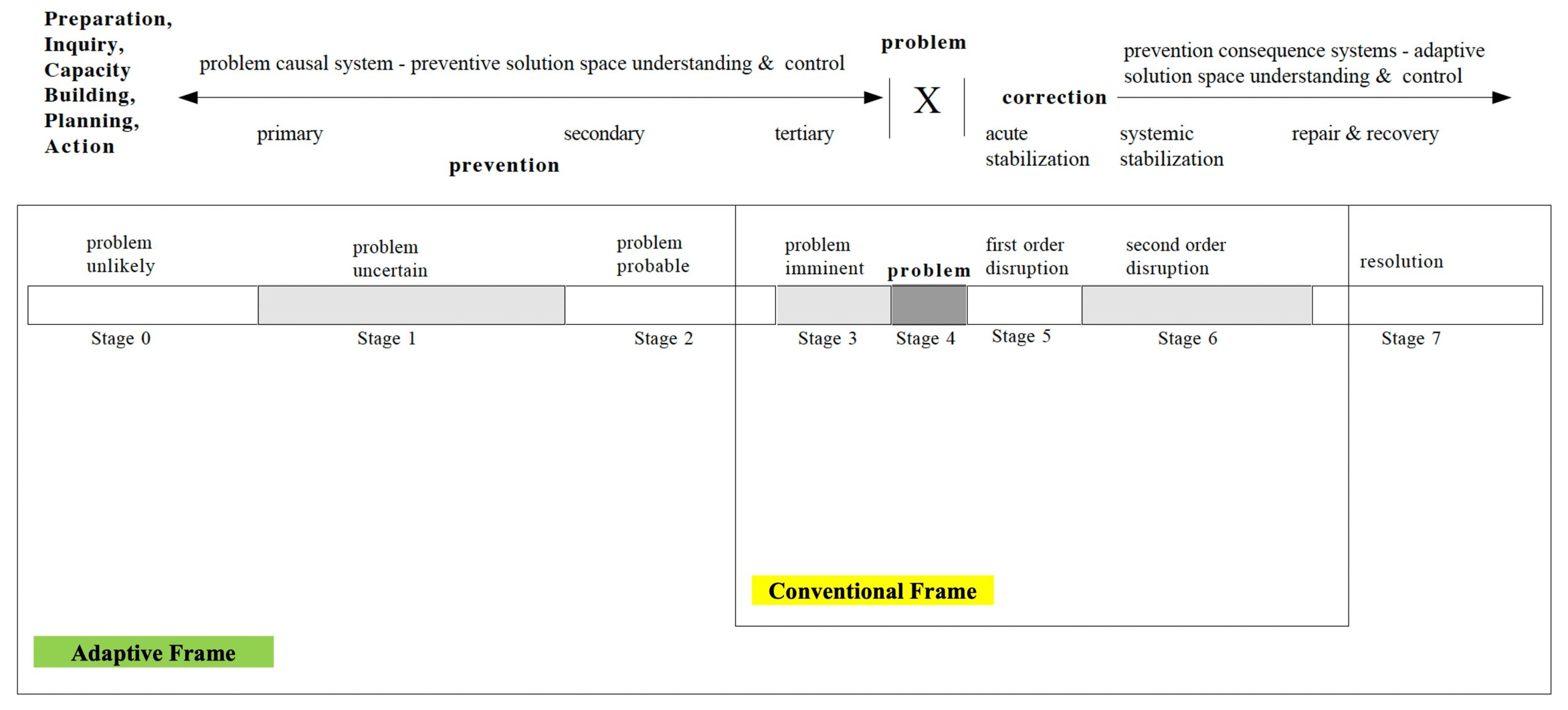

It is important to learn as much as we can so that we can effectively anticipate and prevent issues while also benefiting from the adaptive qualities of AI. Human Learning Ecology teaches us that conventional problem-solving addresses problems too late; it is mostly reactive (Figure 1). Consider “putting out fires” at work, lagging quality indicators such as poor customer reviews, or crisis-driven planning and policy development (e.g., was the global community prepared for the COVID-19 pandemic?).

Effective and adaptive problem-solving involves identifying possible issues and their causal factors so they can be detected early and prevented. Examples include engineering failure prevention, aircraft accident prevention, health promotion and disease prevention, and quality management programs. For instance, there are millions of commercial flights globally each year, and in 2023 the aircraft accident rate was 1 in every 880,293 flights. To achieve this safety record, the commercial aviation sector has, over time, studied and learned from accidents and near misses, anticipated and prevented a multitude of scenarios, and established rigorous safety standards. The recent quality concerns regarding Boeing aircraft reflect this error-detection system failing at the organizational level, even as the wider industry called them out as a risk.

Take the recent bridge collapse in Baltimore. It has several root causes, but one of them was the size of the cargo ship DALI and the inability of the bridge’s support columns to withstand a direct hit. For bridge engineers in the 1970s to anticipate this scenario, they would have needed to consider the size and impact of much larger vessels than those of their day. The history of ships colliding with bridges was likely considered in the engineering plans, but what size of ships did those scenarios assume? What previous bridge failures did the engineers learn from? A ship the size of DALI may have seemed foreign at the time, but not impossible.

Learning from History

So, what do anticipation and prevention of intentional and unintentional misuse of AI look like? What is responsible AI? What history can we draw on and learn from? Several researchers and institutions are considering the impact of AI, and the predicted implications range from a dystopian world in which humanity is destroyed to a utopian world in which we are free from all work. Considering the externalities of AI, or its unintended benefits and harms, requires us to ask questions about the sources of data, how the data will be used, and by whom (Acemoglu 2021; Christian 2020; Hagendorff 2022).

The inventor of the World Wide Web, Tim Berners-Lee, recently published his reflections on 35 years of web developments and the value and harm the web has enabled in society:

[The internet] was to be a tool to empower humanity. The first decade of the web fulfilled that promise — the web was decentralised with a long-tail of content and options, it created small, more localised communities, provided individual empowerment and fostered huge value. Yet in the past decade, instead of embodying these values, the web has instead played a part in eroding them. The consequences are increasingly far reaching. From the centralisation of platforms to the AI revolution, the web serves as the foundational layer of our online ecosystem — an ecosystem that is now reshaping the geopolitical landscape, driving economic shifts and influencing the lives of people around the World.

Tim Berners-Lee (2024)

This is history we can learn from. Generative AI applications like ChatGPT are predominantly trained on data sourced from the internet. The fact that ChatGPT readily describes the potential risks of AI suggests that the global sources of data it draws on express the same concerns. Therefore, an anticipatory approach to the AI revolution should consider lessons from history, apply this knowledge in design, and put guardrails in place to prevent misuse.

Are we in an AI Revolution?

The renowned economist Joel Mokyr stated, “The key to the Industrial Revolution was technology, and technology is knowledge” (2002, 29). Technical knowledge did not just become known; it became accessible to the population through literacy and documentation. The growth and accessibility of new technical knowledge led to new occupations such as accountants and consulting engineers (2002, 57). Mokyr concludes that the Industrial Revolution created “opportunities that simply did not exist before” (2002, 77).

Popular business writing today holds that human civilization is in a Fourth Industrial Revolution, marked by “intelligent machines, of worldwide immediate access to information via the internet and related information networks” (Norman 2023, 25). AI is a product of this Fourth Industrial Revolution and will be foundational to further developments. As with the First Industrial Revolution, access to AI technology is likely to create new occupations and industries. The work ahead of us involves understanding how AI is designed, what it is used for, whom it benefits, and whom it may harm, and course-correcting when harm to individuals or communities is anticipated.

It’s essential to approach the AI revolution with careful consideration, ensuring that it benefits humanity as a whole while mitigating its potential risks.

ChatGPT 3.5, April 2024

So, how do we heed the advice of ChatGPT and ensure that AI benefits all of humanity? Where do we start? How do we understand the threats and opportunities of AI? What tools do we have to do this?

This series of articles will share insights into how Human Learning Ecology can be used not only to understand the threats and opportunities of AI, but also to design a better world, one designed for all of humanity and life.

Resources to Support Learning

Resources related to AI seem unlimited right now and grow daily. I have curated a provisional list of the resources I found most helpful for understanding the current situation and that support a Human Venture inquiry approach. This list will continue to grow and change. Link to AI Resource List 2024.

Resources Cited in this Article

- Acemoglu, Daron. 2021. “The Harms of AI.” The Oxford Handbook of AI Governance.

- Action Studies Institute. 2019. “The Human Venture & Pioneer Leadership Journey Reference Maps.” Calgary, Alberta.

- Anyoha, Rockwell. 2017. “The History of Artificial Intelligence.” Science in the News, Harvard.

- Berners-Lee, Tim. 2024. “Marking the Web’s 35th Birthday: An Open Letter.” Medium.

- Christian, Brian. 2020. “The Alignment Problem: Machine Learning and Human Values.” W. W. Norton & Company.

- Hagendorff, Thilo. 2022. “Blind Spots in AI Ethics.” AI and Ethics.

- Harris, Tristan, and Aza Raskin. 2023. “The AI Dilemma.” Your Undivided Attention (podcast), March 24, 2023.

- Mokyr, Joel. 2002. “The Gifts of Athena: Historical Origins of the Knowledge Economy.” Princeton University Press.

- Norman, Don. 2023. “Design for a Better World.” The MIT Press.

Natalie Muyres is a Human Venture Leadership Associate and current Co-chair of the Human Venture Institute. She is an independent applied organizational and design anthropologist, consultant, project manager, and organizational change management professional in Calgary, Alberta.

Human Venture Leadership is a non-profit learning organization, providing proven educational programs that enable and grow the leadership capacities of individuals, communities and our global society. The Human Venture Institute is a research hub whose primary function is to cement and extend Human Learning Ecology.